rsc's Diary: ELC-E 2018 - Day 2

This is my report from the 2nd day of Embedded Linux Conference Europe (ELC-E) 2018 in Edinburgh.

Grabbing Audio and Video on a Board Farm

Krzysztof Opasiak runs board farms at Samsung. Board farms are used both for automated testing and for making boards available to developers, because hardware is always scarce in early project phases. Another use case at Samsung is giving developers access to (potentially secret!) prototype devices without physically handing them over or shipping boards around the world. He found that running a board farm is much like running a server room, so putting it into a centralized location with staff who can fix cables is a good idea. On the software side, he uses LAVA to manage his test queue.

On the hardware side, they decided that each device under test (DuT) should be connected to its own controller computer, called MuxPi; the architecture can be found on GitHub. Each MuxPi provides an API to access the hardware. The layer above, Boruta, takes care of organizing access to the devices (similar to what labgrid does).

A MuxPi consists of a NanoPi (well, it's cheap) and handles power supply control, SD card muxing and "dipers" (dynamic jumpers: relays for power-on switches etc.), plus a USB serial switch and the usual serial console. In addition, they found it a good idea to have a display that shows the board status.

Audio and video are not directly included, but there is an HDMI connector on the board with an EDID injector to fake a monitor in case the DuT expects one. If you really want to grab audio and video, an add-on board with an LKV373a HDMI extender is available; that device is normally used to distribute HDMI over Ethernet to synchronized monitors.
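What such an EDID injector has to present is well defined. A 128-byte EDID base block starts with the fixed header `00 FF FF FF FF FF FF 00`. Its last byte is a checksum chosen so that all 128 bytes sum to zero modulo 256. The following Python sketch checks and repairs that property, which is handy when patching a captured EDID before injecting it. It is not MuxPi code, and the function names are made up for illustration.

```python
EDID_HEADER = bytes([0x00, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0x00])
BLOCK_SIZE = 128


def is_valid_base_block(block: bytes) -> bool:
    """Header must match and all 128 bytes must sum to 0 modulo 256."""
    return (len(block) == BLOCK_SIZE
            and block[:8] == EDID_HEADER
            and sum(block) % 256 == 0)


def fix_checksum(block: bytes) -> bytes:
    """Recompute the checksum byte (last byte) of a 128-byte EDID block."""
    if len(block) != BLOCK_SIZE:
        raise ValueError("EDID blocks are 128 bytes long")
    body = block[:-1]
    return body + bytes([(-sum(body)) % 256])
```

A DuT may ignore an EDID with a wrong checksum. Any edit to a captured block, for example changing the preferred timing, should therefore be followed by `fix_checksum()`.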

The chip streams video into the network (in an MPEG-like format with proprietary extensions), and it costs $30. The MuxPi also emulates keyboard and mouse, using the USB device controller on the NanoPi NEO. While sending keyboard events was easy, sending mouse events turned out to be CPU intensive, so he sends touchscreen events instead.
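On the keyboard side, a USB gadget typically sends 8-byte HID "boot protocol" reports. Each report carries one modifier byte, one reserved byte and up to six usage IDs from the HID usage tables. An all-zero report follows to release the keys. The sketch below assumes a Linux HID function gadget that is already configured with a boot keyboard report descriptor and appears as `/dev/hidg0`. The function names and the device path are assumptions, not taken from MuxPi.

```python
from typing import List, Tuple

LEFT_SHIFT = 0x02   # modifier bit for the left Shift key
RELEASE = bytes(8)  # all keys up


def usage_id(ch: str) -> Tuple[int, int]:
    """Map a character to (HID usage ID, modifier byte); small subset only."""
    if "a" <= ch <= "z":
        return 0x04 + ord(ch) - ord("a"), 0
    if "A" <= ch <= "Z":
        return 0x04 + ord(ch) - ord("A"), LEFT_SHIFT
    if "1" <= ch <= "9":
        return 0x1E + ord(ch) - ord("1"), 0
    if ch == "0":
        return 0x27, 0
    if ch == "\n":
        return 0x28, 0  # Enter
    if ch == " ":
        return 0x2C, 0  # Space
    raise ValueError(f"unsupported character: {ch!r}")


def reports_for_text(text: str) -> List[bytes]:
    """One press report plus one release report per character."""
    reports = []
    for ch in text:
        key, mod = usage_id(ch)
        reports.append(bytes([mod, 0, key, 0, 0, 0, 0, 0]))
        reports.append(RELEASE)
    return reports


def type_text(text: str, device: str = "/dev/hidg0") -> None:
    """Write the reports to the HID gadget device (assumed path)."""
    with open(device, "wb", buffering=0) as hid:
        for report in reports_for_text(text):
            hid.write(report)
```

For example, `type_text("root\n")` would log in on a serial-less console, assuming the gadget is bound and the DuT has enumerated it as a keyboard.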

Since the LKV device has its issues, the HDMI2USB, an Australian grabber project, might be a better option. However, that device is currently too expensive for their needs.

Drone SITL Bringup With the IIO Framework

Next, Bandan Das talked about drone software-in-the-loop (SITL) bring-up with the Industrial I/O (IIO) framework, a hobby project of his that dates back to his university days. Today, most drone controller hardware is based on microcontrollers such as the STM32, but that's not the right choice if you want more powerful hardware and algorithms, such as sophisticated video processing. He is using the Intel Aero Compute Board, which combines an x86 processor with a companion STM32 microcontroller for the actual flight control. That looked a bit redundant to him, so he searched for ways to run the flight control code on the x86 as well.

In a first step, he played with the SPI and I2C userspace drivers to access the sensors. Proper kernel drivers are better for system stability, though, so he started working with the Industrial I/O framework. The board has an inertial measurement unit, a 3-axis geomagnetic sensor and a pressure sensor; fortunately, drivers for them were already available in the IIO framework.

IIO has three main abstractions:

- "channels", which bundle the data from one sensor;
- "buffers", which move the raw data into a ring buffer that userspace can access via mmap;
- "triggers".

Triggers can be fired by the same device, by another device or even by userspace, and they provide the events your application can attach to. In userspace, the shim library libiio makes IIO devices easy to access.
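IIO's sysfs interface shows what libiio abstracts. Each channel exposes a `_raw` attribute plus optional `_offset` and `_scale` attributes, either per channel or shared by the channel type. The value in standard units is `(raw + offset) * scale`. The minimal Python sketch below assumes a device directory such as `/sys/bus/iio/devices/iio:device0` and an accelerometer with an `in_accel_x_raw` attribute. The helper names are hypothetical.

```python
from pathlib import Path
from typing import List, Optional


def to_processed(raw: float, scale: float = 1.0, offset: float = 0.0) -> float:
    """Convert a raw IIO reading into standard units: (raw + offset) * scale."""
    return (raw + offset) * scale


def read_attr(dev: Path, candidates: List[str], default: Optional[float]) -> float:
    """Return the first existing attribute from candidates as float, else default."""
    for name in candidates:
        path = dev / name
        if path.exists():
            return float(path.read_text().strip())
    if default is None:
        raise FileNotFoundError(f"none of {candidates} found in {dev}")
    return default


def read_channel(dev_dir: str, chan_type: str, axis: str) -> float:
    """E.g. chan_type='in_accel', axis='x' reads in_accel_x_raw and scales it."""
    dev = Path(dev_dir)
    chan = f"{chan_type}_{axis}"
    raw = read_attr(dev, [f"{chan}_raw"], None)
    # Per-channel attributes take precedence over ones shared by the type.
    scale = read_attr(dev, [f"{chan}_scale", f"{chan_type}_scale"], 1.0)
    offset = read_attr(dev, [f"{chan}_offset", f"{chan_type}_offset"], 0.0)
    return to_processed(raw, scale, offset)
```

On a real board, `read_channel("/sys/bus/iio/devices/iio:device0", "in_accel", "x")` returns a single reading. For continuous, triggered capture, the buffer and libiio are the better route.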

Next, he dealt with SITL/HITL (software/hardware in the loop). Instead of working directly with the real hardware, the hardware is simulated, while the drone software thinks the data comes from real sensors. The SITL code basically has to fill the corresponding data structures; instead of coming from real sensors, the data can be pushed in and out via UDP network packets.
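The UDP injection can be pictured like this: the simulator packs a sensor sample into a datagram, and the flight code unpacks it where it would otherwise read the IIO device. The wire format below is purely hypothetical and not the protocol of any particular flight stack: little-endian, one double timestamp plus three accelerometer and three gyroscope floats. The units are assumptions as well.

```python
import socket
import struct
from dataclasses import dataclass
from typing import Tuple

# Hypothetical wire format: little-endian double timestamp + 6 floats.
SENSOR_FMT = struct.Struct("<d6f")

Vec3 = Tuple[float, float, float]


@dataclass
class ImuSample:
    timestamp: float  # seconds of simulated time
    accel: Vec3       # m/s^2 (assumed unit)
    gyro: Vec3        # rad/s (assumed unit)


def pack_sample(s: ImuSample) -> bytes:
    return SENSOR_FMT.pack(s.timestamp, *s.accel, *s.gyro)


def unpack_sample(data: bytes) -> ImuSample:
    t, ax, ay, az, gx, gy, gz = SENSOR_FMT.unpack(data)
    return ImuSample(t, (ax, ay, az), (gx, gy, gz))


def loopback_roundtrip(s: ImuSample) -> ImuSample:
    """Send one sample over UDP on localhost and read it back."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as rx, \
         socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as tx:
        rx.bind(("127.0.0.1", 0))  # let the OS pick a free port
        rx.settimeout(1.0)
        tx.sendto(pack_sample(s), rx.getsockname())
        data, _addr = rx.recvfrom(SENSOR_FMT.size)
    return unpack_sample(data)
```

The sensor values travel as 32-bit floats and are rounded accordingly. That is one reason a real SITL protocol specifies its format explicitly instead of sending native structs.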

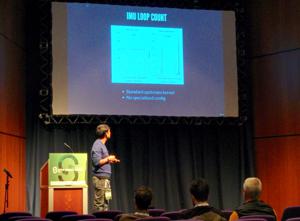

He doesn't have the whole flight stack running yet, but he has experimented with the loop speed. Unsurprisingly, an unpatched mainline kernel showed spikes in the cycle time; however, isolating CPUs already made the spikes go away. His todo list includes testing the PREEMPT_RT kernel and doing more latency tests.
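The cycle-time measurement itself follows a simple pattern: sleep until an absolute deadline, record how late the wakeup was, then advance the deadline by one period. The Python sketch below only illustrates that pattern; for real numbers, `cyclictest` from the rt-tests suite is the usual tool. Optionally pinning the loop to a CPU removed from the scheduler with the `isolcpus=` kernel parameter mirrors the CPU isolation experiment. Parameter names and defaults are assumptions.

```python
import os
import time
from typing import Optional, Tuple


def measure_wakeup_latency(period_us: int = 1000, cycles: int = 1000,
                           cpu: Optional[int] = None) -> Tuple[int, int, int]:
    """Run a periodic loop and return (min, avg, max) wakeup latency in ns."""
    if cpu is not None:
        os.sched_setaffinity(0, {cpu})  # Linux only: pin to one (isolated) CPU
    period_ns = period_us * 1000
    latencies = []
    deadline = time.monotonic_ns() + period_ns
    for _ in range(cycles):
        remaining = deadline - time.monotonic_ns()
        if remaining > 0:
            time.sleep(remaining / 1e9)
        # How late did we wake up relative to the absolute deadline?
        latencies.append(time.monotonic_ns() - deadline)
        deadline += period_ns  # absolute deadlines avoid cumulative drift
    return min(latencies), sum(latencies) // len(latencies), max(latencies)
```

For example, after booting with `isolcpus=3`, `measure_wakeup_latency(cpu=3)` runs the loop on the isolated core. Comparing the maximum with and without isolation shows the spikes.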

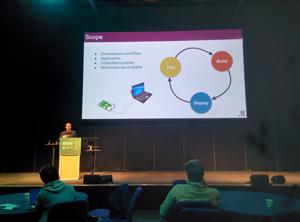

Deploy Software Updates for Linux Devices

Mirza Krak then talked about how to build, test and deploy embedded Linux firmware. He first explained the overall Linux development environment and ways to transfer self-written software to the devices; for automated integration of his systems, he uses Yocto and the Ångström distribution.

For deploying firmware in production, he recommends image-based updates, because they are stateless: once you have flashed a device, you know exactly what it is running. He uses Mender to update the systems. For local development, images can simply be loaded from a local server, but Mender also scales to bigger setups.
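Image-based updaters such as Mender commonly use two root filesystem slots (A/B). The new image goes into the inactive slot and the bootloader tries it. If userspace doesn't confirm ("commit") that the new system works, the next boot falls back to the old slot. The following Python model is a simplified, hypothetical illustration of that state machine, not Mender's actual implementation.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class ABState:
    active: str = "A"              # slot that is known to work
    pending: Optional[str] = None  # freshly installed, not yet committed
    tries_left: int = 0            # boot attempts granted to the pending slot
    images: Dict[str, Optional[str]] = field(
        default_factory=lambda: {"A": "v1", "B": None})

    def inactive(self) -> str:
        return "B" if self.active == "A" else "A"

    def install(self, image: str, tries: int = 1) -> None:
        """Write the new image to the inactive slot and mark it for trial."""
        slot = self.inactive()
        self.images[slot] = image
        self.pending = slot
        self.tries_left = tries

    def boot(self) -> str:
        """Bootloader decision: return the slot that gets booted."""
        if self.pending is not None:
            if self.tries_left > 0:
                self.tries_left -= 1
                return self.pending
            self.pending = None  # out of tries: roll back
        return self.active

    def commit(self) -> None:
        """Called from the new system once it has verified that it works."""
        if self.pending is not None:
            self.active = self.pending
            self.pending = None
```

At any time the device runs one of two complete, known images, which is what makes the approach stateless in the sense of the talk.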

Unfortunately, he stopped just where things got interesting, so playing with Mender and finding out about its possibilities and limitations is left as an exercise for the listener.

Linux and Zephyr "talking" to each other in the same SoC

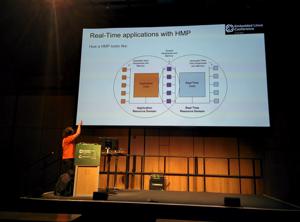

After lunch, Diego Sueiro talked about how "heterogeneous" processors on the same SoC can communicate. Recently, more and more SoCs have appeared that, besides the main CPU, contain a set of usually small coprocessors for dedicated realtime or otherwise privileged tasks. The processor Diego is working with, for example, is the NXP i.MX7.

While realtime Linux with PREEMPT_RT might be a solution for some realtime tasks, dedicated controllers that run only the realtime load can be helpful in some use cases. The i.MX7 has a Cortex-A7 for Linux and a Cortex-M4 for companion work. For communication between the processors, hardware mailboxes and semaphores are available.

The OpenAMP standard provides a software infrastructure that builds on this hardware support:

- remoteproc to control/manage remote processors (power off, reset, load firmware)

- RPMsg for inter processor communication (virtio)

- Proxy operations: remote access to system services

Diego explained the different hardware components which make those interfaces possible.
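On the Linux side, remoteproc exposes each remote processor in sysfs, typically as `/sys/class/remoteproc/remoteproc0`. Writing a file name to `firmware` selects the image, which is looked up in the firmware search path (usually `/lib/firmware`). Writing `start` to `state` boots the coprocessor, and `stop` halts it. Below is a minimal Python sketch of that sequence. The helper name and the firmware file name in the usage line are made up.

```python
from pathlib import Path


def boot_remote(rproc_dir: str, firmware: str) -> None:
    """(Re)start a remoteproc-managed core with the given firmware file."""
    rproc = Path(rproc_dir)
    state = (rproc / "state").read_text().strip()
    if state == "running":
        # The firmware name can only be changed while the core is offline.
        (rproc / "state").write_text("stop")
    (rproc / "firmware").write_text(firmware)
    (rproc / "state").write_text("start")
```

Usage (as root): `boot_remote("/sys/class/remoteproc/remoteproc0", "zephyr-m4.elf")`. Reading `state` afterwards should report `running` if the firmware was accepted.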

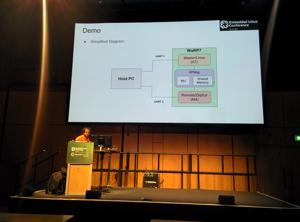

On the Zephyr side, there is an RPMsg-Lite implementation with a small footprint. It currently supports a queue and a name service, which allows the communicating nodes to send announcements about "named" endpoints. He explained how to build the necessary components for Zephyr.
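The name service is a small, well-defined message. An endpoint announces itself by sending a 40-byte record to the reserved name service address 53, behind the usual 16-byte RPMsg header. The record holds a NUL-padded 32-byte name, the endpoint's address and a create/destroy flag. The Python sketch below builds and parses such an announcement, assuming the Linux virtio-rpmsg layout and little-endian byte order as on the i.MX7. Using the announcing endpoint's address as the header's source is an assumption of this sketch. The service name `rpmsg-tty` and address `0x400` are only examples.

```python
import struct
from typing import Tuple

RPMSG_NS_ADDR = 53  # reserved destination address of the name service
RPMSG_NAME_SIZE = 32
RPMSG_NS_CREATE = 0
RPMSG_NS_DESTROY = 1

# Little-endian layouts: header (src, dst, reserved, len, flags) and
# name service payload (name, addr, flags).
HDR = struct.Struct("<IIIHH")
NS_MSG = struct.Struct(f"<{RPMSG_NAME_SIZE}sII")


def build_ns_announcement(name: str, addr: int, create: bool = True) -> bytes:
    raw_name = name.encode("ascii")
    if len(raw_name) >= RPMSG_NAME_SIZE:
        raise ValueError("name must leave room for a terminating NUL")
    flags = RPMSG_NS_CREATE if create else RPMSG_NS_DESTROY
    payload = NS_MSG.pack(raw_name, addr, flags)  # "32s" pads with NULs
    return HDR.pack(addr, RPMSG_NS_ADDR, 0, len(payload), 0) + payload


def parse_ns_announcement(msg: bytes) -> Tuple[str, int, bool]:
    """Return (name, endpoint address, True if created / False if destroyed)."""
    _src, dst, _reserved, length, _flags = HDR.unpack_from(msg, 0)
    if dst != RPMSG_NS_ADDR or length != NS_MSG.size:
        raise ValueError("not a name service announcement")
    raw_name, addr, flags = NS_MSG.unpack_from(msg, HDR.size)
    name = raw_name.split(b"\0", 1)[0].decode("ascii")
    return name, addr, flags == RPMSG_NS_CREATE
```

On receiving such an announcement, the Linux side can match the name against its rpmsg drivers and create a channel to the announced address.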

In his demo, he showed how to operate one serial interface from the Cortex-A and a second one from the Cortex-M, with the two cores communicating via RPMsg.

Unfortunately, Diego didn't find our recent kernel patches that brought RPMsg support into mainline and used the outdated NXP 4.9 kernel instead, so the details outlined in the talk have to be taken with a grain of salt.

10 Years of Industrial IO

Jonathan Cameron then talked about his experiences maintaining the kernel's IIO subsystem for the last 10 years. It all started when he was trying to upstream some sensor drivers and there was no suitable abstraction for them in the kernel. He asked on the kernel mailing list and got the answer: "you'll need to do something new". And so he started.

Over time, all sorts of devices have been added: ADCs and DACs, but also accelerometers, gyroscopes, magnetometers, IMUs, light sensors, chemical sensors etc. IIO's most important characteristic is its userspace interface, which makes hardware-independent application code possible. Single-channel data is read from sysfs, while bulk data can be transferred via FIFOs implemented as character devices.
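For the character device path, each channel's sample layout is described by a `scan_elements/*_type` attribute. Its form is `[be|le]:[s|u]bits/storagebits[Xrepeat][>>shift]`; for example, `le:s12/16>>4` is a signed 12-bit value stored left-aligned in a little-endian 16-bit word. The Python sketch below parses that string and decodes one stored element. It ignores `repeat` when decoding and skips the per-channel alignment handling that a full buffer reader would need.

```python
import re
from dataclasses import dataclass

TYPE_RE = re.compile(r"(be|le):([su])(\d+)/(\d+)(?:X(\d+))?(?:>>(\d+))?")


@dataclass(frozen=True)
class ScanType:
    big_endian: bool
    signed: bool
    bits: int          # significant bits
    storage_bits: int  # bits occupied in the buffer
    repeat: int
    shift: int


def parse_scan_type(text: str) -> ScanType:
    m = TYPE_RE.fullmatch(text.strip())
    if m is None:
        raise ValueError(f"unexpected IIO type string: {text!r}")
    endian, sign, bits, storage, repeat, shift = m.groups()
    return ScanType(endian == "be", sign == "s", int(bits), int(storage),
                    int(repeat or 1), int(shift or 0))


def decode_element(raw: bytes, st: ScanType) -> int:
    """Decode one stored element (repeat ignored) into an integer."""
    word = int.from_bytes(raw[: st.storage_bits // 8],
                          "big" if st.big_endian else "little")
    value = (word >> st.shift) & ((1 << st.bits) - 1)
    if st.signed and value & (1 << (st.bits - 1)):
        value -= 1 << st.bits  # two's complement sign extension
    return value
```

The decoded integer is still a raw value; the channel's `_scale` and `_offset` attributes turn it into standard units, just as for single reads from sysfs.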

Some years ago, a mechanism was added that makes it possible to use IIO devices not only from userspace but also from other kernel drivers, even from several drivers at the same time. Higher-level drivers such as touchscreen drivers can thus make use of low-level ADC interfaces. This added a lot of complexity, but of course not all devices need it.

The biggest issue is still that it is difficult to predict the future. In the past, the maintainers tried to stay compatible with existing ABIs (like hwmon's), but that didn't always turn out to be a good choice. Finding the right balance between simplicity and abstraction therefore remains difficult, as one can't predict which requirements will come up. Another common question is how high-performance devices fit in. Instead of reading values one by one, some use cases require high-performance DMA transfers with inline metadata, self-describing flows etc.; these are currently not handled in mainline. Complex sensors with proprietary userspace interfaces, like pulse oximeters, are not solved so far either.

Besides the technical side of the story, there's also the community side. IIO started in staging, and it took quite a lot of discussion until the code made it out of staging into mainline proper. Nowadays, new drivers come in the normal way, but some drivers are still left in staging. Once the subsystem was there, quite a few companies, but also hobbyists and students, were interested; over time, more than 1000 authors have contributed to the subsystem.
